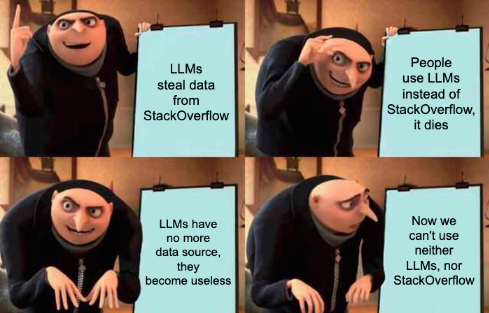

@glyph the story in four acts we are about to see (just one example, but could be Wikipedia, or anything else as well)

A teacher “learning more from their students” is such a common observation that it is a cliché. Colleagues mutually learn from each other in professional settings. Actual artists are in conversation with one another, not just learning from a static historical canon. Etc, etc.

LLMs cannot do this. The output that an LLM produces contains a sort of poisonous residue that makes it destroy the reasoning capacity of other LLMs; this is a well-known problem in the field, known as "model collapse".

Thus, when an LLM absorbs some stolen data, what is happening cannot be 'learning'; it's something else. When we call it 'training', that's a metaphor, not a description. In reality, it is a parasitic activity that requires fresh non-LLM-generated information from humans in order to be sustainable.

Call it a conspiracy, but I'm still convinced that #StackOverflow is dying primarily because the marketing for CodeGen AI demotivated a lot of people to the point that they do not even want to program anything anymore.

I've seen a lot of (what could have been) Juniors avoid doing anything with code because AI will be able to do it in 5 years anyway so there is no point in learning it.

And then there are the large layoffs, so the people who remain are probably too busy to write answers there.

@agowa338 @glyph Well, yeah, kind of. Also, the StackExchange company itself pushed AI onto SO, so it helped this happen. But... the same argument can be made about any other service. For example, if we stop using Wikipedia and switch to LLMs, the same thing will happen. If we stop using our brains and switch to LLMs, we will end up brain-dead.

You missed my point. My point was that people aren't moving towards LLMs but are instead giving up coding altogether and doing other things...

If people were actually moving towards LLMs, you'd see a lot of threads from people who don't understand their own code and have to figure out the complex issues that LLM-generated code causes (aka you'd see an uptick in people having to troubleshoot their own AI-generated code hallucinations)...